Paper written by: Bjorn Hartmann (Stanford University)

Meredith Ringel Morris, Hrvoje Benko, and Andrew D. Wilson (Microsoft Research)

Comments: Gus Zarych, Jo Anne Rodriguez

Summary:

This paper explores the use of wireless mice and keyboards on a large interactive tabletop. The authors start by listing some problems that occur with interactive tabletops and go on to describe what can be used to solve them. One problem is direct touch itself, because it can limit the precision and responsiveness of the input. This is why styli are used on devices whose screens are too small to navigate with direct touch (e.g., with our fingers). Lately tabletops have become large enough that direct touch is more viable and additional input devices can be used alongside it. This paper focuses on using mice and keyboards to interact with the table and with other users, with the goal of providing higher precision and performance and enabling interaction with distant objects. Using these devices also minimizes physical movement.

The paper describes a scenario in which a group of people does research for a project. It describes how the table works and how the group members are able to interact with each other. If someone uses a keyboard, a screen pops up in front of them where they can navigate, run search queries, or write documents. Each person has a specific color on the tabletop that shows which input devices they are using and which screen those devices are associated with. If two keyboards are placed together, they are connected and their users can work jointly on one screen. The paper then covers the different ways the devices can be linked and how users can use them together as a group to create and edit projects.

One way to link a keyboard is called Link-by-Docking, which creates an association based on the proximity of the device: wherever the keyboard is placed, one can drag a digital screen next to it so that the two are linked. Another way is called Link-by-Placing, which is simply placing a keyboard next to a digital screen that is already present on the tabletop in order to link them.
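To make the proximity idea more concrete, here is a minimal sketch of how a table might decide which screen a keyboard gets linked to. This is only my own illustration, not the paper's implementation: the `Item` class, the `nearest_within` function, and the distance threshold are all hypothetical names and values.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional


@dataclass
class Item:
    """A keyboard or a digital screen at a position on the table (hypothetical)."""
    name: str
    x: float
    y: float


def nearest_within(keyboard: Item, screens: List[Item], max_dist: float) -> Optional[Item]:
    """Return the screen closest to the keyboard, or None if none is within max_dist."""
    best = None
    best_dist = max_dist
    for screen in screens:
        dist = hypot(screen.x - keyboard.x, screen.y - keyboard.y)
        if dist <= best_dist:
            best, best_dist = screen, dist
    return best


# Assumed example layout: coordinates and the threshold are made up.
keyboard = Item("keyboard-1", 0.0, 0.0)
screens = [Item("notes", 10.0, 0.0), Item("browser", 50.0, 0.0)]
linked = nearest_within(keyboard, screens, max_dist=20.0)
print(linked.name if linked else "no link")
```

The same check would cover both cases: it does not matter whether the screen was dragged to the keyboard or the keyboard was placed next to the screen, only that the two end up close enough.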

A contextual command prompt appears when there is no target screen: one can place a keyboard down on the table and a text box will appear above it. The box follows the keyboard and interprets the entered text to decide what to do. Pose-based input modification allows two keyboards to be placed together so that they are joined and enable joint search queries. Besides connecting a keyboard to a digital screen on the table, or connecting two keyboards to one screen, there are also ways to connect a mouse to a specific keyboard and screen. Remote object manipulation allows a user to click on an object on the table with the mouse and move it. Two mice can also be used together without having to change the algorithm. The leader line locator helps users find their cursor on a large tabletop among other users' cursors. To connect a mouse with a keyboard there are again two options: link-by-proximity, in which you place a mouse next to the keyboard you want to link it to, and link-by-clicking, in which you move the cursor to the area associated with a keyboard and click to associate the two devices. A user can also link one mouse to multiple keyboards this way.

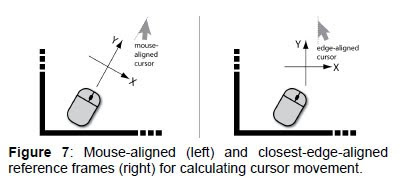

When multiple users share a tabletop, it is more efficient to use the frame options that the interactive tabletop and input devices make available. To get the best precision from a mouse, it is possible to create a reference frame that the mouse interacts with. Since tables are becoming larger and it is easier to have multiple people working on them, a frame like this helps keep one user from moving into someone else's area on the table.
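One way to picture a reference frame is as a rectangle that a user's cursor is not allowed to leave. The sketch below is just my reading of the idea under that assumption; the `Frame` class, the `move_cursor` function, and the numbers are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Frame:
    """A rectangular region of the table assigned to one user's mouse (hypothetical)."""
    left: float
    top: float
    width: float
    height: float


def move_cursor(pos: Tuple[float, float], delta: Tuple[float, float], frame: Frame) -> Tuple[float, float]:
    """Apply a mouse movement, then clamp the cursor so it stays inside the frame."""
    x = min(max(pos[0] + delta[0], frame.left), frame.left + frame.width)
    y = min(max(pos[1] + delta[1], frame.top), frame.top + frame.height)
    return (x, y)


# Assumed example: a 100 x 50 frame, with the cursor pushed past two edges.
frame = Frame(left=0, top=0, width=100, height=50)
print(move_cursor((90, 10), (30, -20), frame))  # (100, 0)
```

With a frame like this, a large mouse movement stops at the edge of the user's own region instead of drifting into a neighbor's workspace.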

For projects that require certain users to work on certain parts, there is an option for a keyboard to ask for the user's credentials before interaction begins. This associates their ID with whatever work they do.

Discussion:

I think this paper is interesting and significant because computers and electronic devices are becoming more touch oriented with each generation. I think this will help companies have larger groups work together on the same project in the same room, instead of communicating between cubicles (or offices). There could be some faults with how the devices become associated with each other, or with the way they interact, but the idea seems pretty great. I think this could be adopted business-wide by certain companies because it allows multiple people to work on a project while each person is logged in so that all of their work is associated with their ID. The "bosses" can see what each person has worked on and can also do reviews based on the work that person has done. This could also be a bad thing, but in a way it could allow businesses to be more efficient.